Multidisciplinary Research and Education on Big Data + High-Performance Computing + Atmospheric Sciences

Part of NSF Initiative on Workforce Development for Cyberinfrastructure (CyberTraining)

Background

HPC and Big Data Requirements for Atmospheric Sciences

Clouds play an important role in Earth’s climate system, particularly in its radiative energy budget. On the one hand, clouds reflect a significant fraction of incoming solar radiation back to space, which exerts a cooling effect on the climate. On the other hand, like greenhouse gases, clouds absorb thermal radiation emitted by the Earth's surface and re-emit it at a lower temperature, which has a warming effect on the climate. In addition, clouds are an important link in the Earth’s water cycle and play a central role in aerosol-cloud-radiation interactions.
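The two competing effects described above can be put into rough numbers. The minimal Python sketch below assumes that both the surface and an opaque cloud top radiate as blackbodies (emissivity 1) following the Stefan-Boltzmann law. The temperatures (288 K surface, 250 K cloud top), the 340 W/m² incoming solar flux, the clear-sky and cloudy albedos, and the function names are all illustrative assumptions, not values from this project. It is a single-scene illustration, not an estimate of the global cloud radiative effect.

```python
# Illustrative sketch only: blackbody surface and cloud top (emissivity 1);
# all temperatures, fluxes and albedos used below are hypothetical values.
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def emitted_flux(temperature_k: float) -> float:
    """Blackbody thermal emission per unit area (W m^-2)."""
    return SIGMA * temperature_k ** 4


def longwave_warming(surface_k: float, cloud_top_k: float) -> float:
    """Reduction in thermal emission to space when a colder, opaque cloud top
    radiates to space in place of the warmer surface."""
    return emitted_flux(surface_k) - emitted_flux(cloud_top_k)


def shortwave_cooling(incoming_w_m2: float, clear_albedo: float, cloudy_albedo: float) -> float:
    """Extra sunlight reflected to space because the cloud raises the albedo."""
    return incoming_w_m2 * (cloudy_albedo - clear_albedo)


if __name__ == "__main__":
    lw = longwave_warming(surface_k=288.0, cloud_top_k=250.0)
    sw = shortwave_cooling(incoming_w_m2=340.0, clear_albedo=0.15, cloudy_albedo=0.5)
    print(f"longwave warming: {lw:.1f} W/m^2, shortwave cooling: {sw:.1f} W/m^2")
```

With these inputs, the colder cloud top emits roughly 169 W/m² less thermal radiation to space than the surface would, while the brighter cloud reflects an additional 119 W/m² of sunlight. Real clouds can tip this balance either way depending on their height, thickness, and the time of day.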

Because of the important role of clouds in the climate system, a realistic and accurate representation of clouds in numerical global climate models (GCMs) is critical for simulating the current and future climate. At present, however, the current generation of GCMs disagrees significantly on whether, and to what extent, cloud changes induced by global warming would accelerate or dampen the warming. Recent scientific reports of the Intergovernmental Panel on Climate Change (IPCC) have identified cloud feedback as one of the largest uncertainties in projections of future climate.

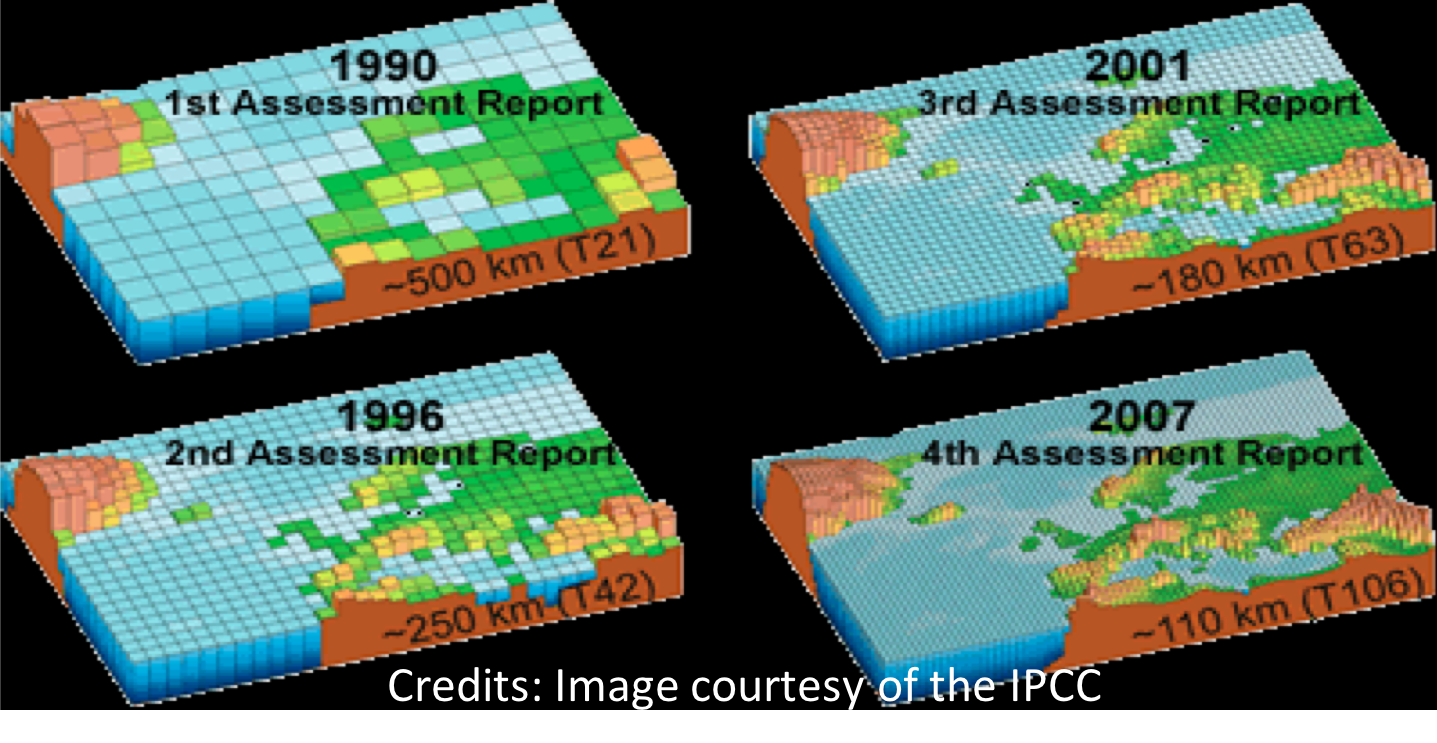

HPC requirements for cloud simulation in GCMs. It is extremely challenging to simulate clouds in GCMs realistically and accurately, for two main reasons. First, many cloud-related processes, such as turbulence and convection, cloud droplet activation and growth, and the transfer of radiation through clouds, occur at spatial scales much smaller than the typical grid size of conventional GCMs (~100 km). New techniques, such as cloud super-parameterization, which embeds cloud-resolving models with a resolution of around 1 km inside conventional GCMs, have been developed to address this problem. However, such techniques usually come at a high computational cost, which makes HPC a more indispensable tool for climate modeling than ever. Second, to avoid high computational cost, many processes are modeled using highly simplified methods even though comprehensive methods are available. For instance, in the current paradigm, clouds are simply approximated as “plane-parallel” one-dimensional (1D) columns, even though this approximation is known to cause significant errors in atmospheric radiation and remote sensing computations. Over the past decade, a number of 3D radiative transfer models have been developed. These new models, together with the rapid growth of HPC resources, have created new opportunities to shift the paradigm from 1D plane-parallel to realistic 3D simulation of radiative transfer and cloud-radiation interactions.
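One well-known consequence of the plane-parallel assumption can be illustrated in a few lines. Cloud reflectance is a concave function of optical depth, so the albedo computed from a domain-averaged optical depth exceeds the average albedo of the individual columns. The sketch below assumes a simple two-stream reflectance for a non-absorbing cloud, R = (1 - g)τ / (2 + (1 - g)τ), with an assumed asymmetry parameter g = 0.85 and hypothetical optical depths; the function names are also hypothetical. It captures only the effect of horizontal heterogeneity. Genuinely 3D effects, such as horizontal photon transport and illumination of cloud sides, require full 3D radiative transfer models.

```python
# Illustrative sketch only: assumes a two-stream reflectance for a
# conservatively scattering (non-absorbing) cloud layer,
#   R = (1 - g) * tau / (2 + (1 - g) * tau),
# with a hypothetical asymmetry parameter g and hypothetical optical depths.
from statistics import mean

G = 0.85  # assumed asymmetry parameter for cloud droplets


def two_stream_albedo(tau: float, g: float = G) -> float:
    """Reflectance of a homogeneous, non-absorbing cloud layer of optical depth tau."""
    scaled = (1.0 - g) * tau
    return scaled / (2.0 + scaled)


def plane_parallel_bias(taus: list[float]) -> float:
    """Albedo of the homogenized (mean-tau) cloud minus the mean albedo of
    the individual columns; positive because the albedo curve is concave."""
    homogeneous = two_stream_albedo(mean(taus))
    independent_columns = mean(two_stream_albedo(t) for t in taus)
    return homogeneous - independent_columns


if __name__ == "__main__":
    broken_cloud_field = [2.0, 50.0]  # hypothetical thin and thick columns
    print(f"plane-parallel albedo bias: {plane_parallel_bias(broken_cloud_field):+.3f}")
```

For the two-column field in the example, the homogenized cloud reflects about 0.66 of the incoming sunlight while the individual columns average about 0.46, an overestimate of roughly 0.20 in albedo.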

Big Data analytics requirements for evaluating GCMs using multi-decadal satellite observations. The performance and reliability of GCMs are evaluated by comparing model simulations with measurements. Traditional measurements of the atmosphere made at weather stations are sparse, especially over the oceans, and unevenly distributed. Advances in satellite-based remote sensing have revolutionized the way we observe and measure the state of the atmosphere. Satellite-based measurements of global cloud properties have now become an important data source for evaluating cloud simulations in GCMs.
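At its simplest, a model-versus-observation comparison reduces to summary statistics computed over a common grid. The sketch below is a hypothetical helper, assuming the simulated and satellite-derived fields (for example, cloud fraction) have already been regridded onto the same regular latitude-longitude grid. Each grid row is weighted by the cosine of its latitude, because cells on such a grid shrink toward the poles. The grid and field values are made up for illustration.

```python
import math


def area_weighted_scores(lats_deg, model, obs):
    """Return (mean bias, RMSE) of model minus obs on a regular lat-lon grid.

    Hypothetical helper: each row of `model` and `obs` holds the values for
    one latitude in `lats_deg`, weighted by cos(latitude) as a proxy for cell area.
    """
    total_w = sum_diff = sum_sq = 0.0
    for lat, model_row, obs_row in zip(lats_deg, model, obs):
        w = math.cos(math.radians(lat))  # relative area of a cell in this row
        for m, o in zip(model_row, obs_row):
            d = m - o
            total_w += w
            sum_diff += w * d
            sum_sq += w * d * d
    return sum_diff / total_w, math.sqrt(sum_sq / total_w)


if __name__ == "__main__":
    lats = [-60.0, 0.0, 60.0]                     # hypothetical grid rows
    obs = [[0.8, 0.8], [0.6, 0.5], [0.7, 0.7]]    # hypothetical observed cloud fraction
    model = [[0.7, 0.8], [0.5, 0.4], [0.7, 0.6]]  # hypothetical simulated cloud fraction
    bias, rmse = area_weighted_scores(lats, model, obs)
    print(f"bias = {bias:+.3f}, RMSE = {rmse:.3f}")
```

In this made-up example the model is too clear in most cells, so the area-weighted bias is negative (about -0.075).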

Satellite remote sensing has also produced an enormous and rapidly growing volume of data. For example, measurements from the Moderate Resolution Imaging Spectroradiometer (MODIS) are widely used for GCM evaluation. MODIS measures the radiation reflected and emitted by the Earth-atmosphere system in 36 spectral bands, continuously, over a swath 2,330 km wide. Since its launch in 1999, MODIS has made continuous measurements for almost two decades, which are invaluable for understanding climate variability and trends. However, the sheer data volume (~500 TB of raw data and on the order of petabytes of processed data) has become a major obstacle to making full use of the MODIS data record.
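Because per-cell pixel counts simply add, analysis of a large swath archive can be organized as a map-reduce computation: each granule is summarized independently, and the partial results are merged in any order. The sketch below assumes each granule has already been decoded into hypothetical (latitude, longitude, cloudy) pixel records from a cloud-mask product; reading actual MODIS files, which requires an HDF reader, is omitted, and all function names are hypothetical. Threads are used only to show the independent map step; at the data volumes quoted above, the same pattern would typically be distributed across processes or cluster nodes.

```python
import math
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def map_granule(pixels):
    """Map step: count cloudy and total pixels per 1x1 degree cell in one granule.

    `pixels` is a hypothetical list of (lat, lon, cloudy) records.
    """
    counts = defaultdict(lambda: [0, 0])
    for lat, lon, cloudy in pixels:
        cell = (math.floor(lat), math.floor(lon))
        counts[cell][0] += int(cloudy)
        counts[cell][1] += 1
    return dict(counts)


def merge(a, b):
    """Reduce step: add counts cell by cell (associative and commutative)."""
    merged = {cell: list(c) for cell, c in a.items()}
    for cell, (cloudy, total) in b.items():
        acc = merged.setdefault(cell, [0, 0])
        acc[0] += cloudy
        acc[1] += total
    return merged


def cloud_fraction(granules, workers=4):
    """Summarize granules independently, merge partial counts, return per-cell cloud fraction."""
    # Threads only illustrate the independent map step; CPU-bound work at scale
    # would be spread across processes or cluster nodes instead.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(map_granule, granules))
    totals = reduce(merge, partials, {})
    return {cell: cloudy / total for cell, (cloudy, total) in totals.items()}


if __name__ == "__main__":
    granules = [  # hypothetical decoded pixels: (lat, lon, cloudy)
        [(10.2, 20.5, True), (10.7, 20.1, False)],
        [(10.5, 20.9, True), (-0.5, 5.0, False)],
    ]
    print(cloud_fraction(granules))
```

Keeping only small per-cell counts from each granule, rather than the pixels themselves, is what lets the merge step scale: the size of the merged result depends on the grid, not on the number of granules.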